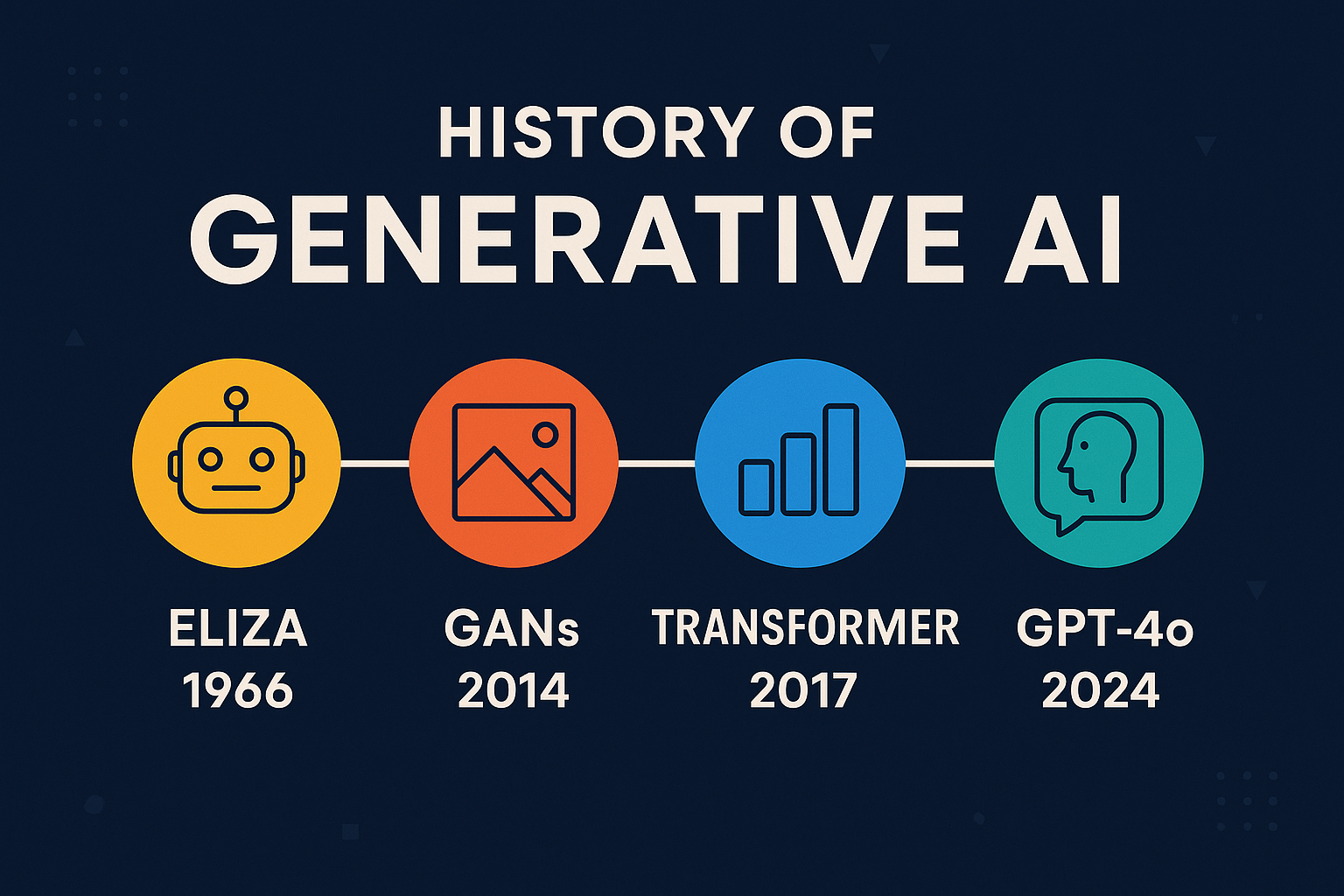

The Fascinating History of Generative AI — From ELIZA to GPT-4o

Generative AI feels like a breakthrough technology of the 2020s, but its origins stretch back many decades. The journey of Generative AI is not just a tale of modern Silicon Valley startups, but a fascinating timeline of scientific research, mathematical models, and persistent human curiosity. This article walks you through the most important historical milestones that have shaped the powerful Generative AI tools we see today.

The Very Beginning: Alan Turing and the Turing Test (1950)

The story of Generative AI cannot begin without mentioning Alan Turing. In 1950, the British mathematician and computer scientist posed a provocative question in his paper “Computing Machinery and Intelligence”: Can machines think? This led to the famous Turing Test, in which a machine is considered intelligent if a human conversing with it cannot reliably tell that it is a machine.

Although no machine at that time came close to passing this test, Turing’s proposal laid the conceptual foundation for Artificial Intelligence (AI) as a field. His vision inspired generations of researchers to explore whether machines could simulate human thought, leading eventually to conversational AI systems.

The Early Days: ELIZA and Rule-Based AI (1960s)

In 1966, Joseph Weizenbaum, a computer scientist at MIT, developed one of the first examples of a conversational program called ELIZA. ELIZA simulated a Rogerian psychotherapist by using simple pattern-matching rules. While ELIZA had no true understanding of language or context, it cleverly rearranged user inputs into scripted responses that often fooled users into thinking they were speaking to an intelligent agent.
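To make the pattern-matching idea concrete, here is a minimal sketch in Python. The rules, the reflection table, and the function names are hypothetical and invented for illustration; they are not Weizenbaum's original script, which was considerably richer.

```python
import re

# Hypothetical rules for illustration only (not the original ELIZA script).
# Each rule pairs a pattern with a response template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so the echo reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "mine": "yours"}

FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Rewrite the captured fragment from the user's point of view to the program's."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the template of the first matching rule, or a stock fallback."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I need a break from my job."))
# prints: Why do you need a break from your job?
```

Nothing in this sketch models meaning: the program only rearranges the user's own words, which is exactly why the conversation can feel responsive without any understanding behind it.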

ELIZA’s success revealed how even simple algorithms could create the illusion of understanding. Despite its limitations, ELIZA captured public imagination and marked one of the first major steps towards developing interactive, generative AI systems.

The Rise of Statistical Models: Markov Chains and Early Neural Networks

Alongside rule-based systems, researchers drew on statistical models, some dating back to the early 1900s, to predict language and process data:

- 1906: Markov Chains

Russian mathematician Andrey Markov developed statistical models that predicted future events based on previous events. Markov Chains became a foundational tool in language modeling, as they could predict the likelihood of a word following another based on past data.

- 1950s–1980s: Neural Networks

Early neural networks mimicked how biological neurons transmit information. The Perceptron was an early attempt to create machines that could learn from data. However, neural networks of this era struggled with complex problems due to limited computing power and theoretical limitations.

- 1997: Long Short-Term Memory (LSTM)

Sepp Hochreiter and Jürgen Schmidhuber introduced LSTM networks, a major breakthrough for processing sequences like speech and text. LSTMs could retain information across long sequences, easing the vanishing-gradient problem that kept earlier recurrent networks from learning long-range dependencies. This laid critical groundwork for more advanced language models to emerge later.
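The Markov chain idea from the list above can be sketched in a few lines of Python. This is a minimal word-level (bigram) model under simplifying assumptions: the toy corpus, the function names, and the whitespace tokenization are all invented for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def next_word_probabilities(model: dict, word: str) -> dict:
    """Turn the follower counts for one word into probabilities."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(model: dict, start: str, length: int, seed: int = 0) -> str:
    """Sample a word sequence; each step depends only on the current word."""
    rng = random.Random(seed)
    word = start.lower()
    output = [word]
    for _ in range(length - 1):
        followers = model.get(word)
        if not followers:
            break  # the current word never had a successor in the corpus
        word = rng.choices(list(followers), weights=list(followers.values()))[0]
        output.append(word)
    return " ".join(output)


# Toy corpus, assumed for illustration.
model = train_bigram_model("the cat sat on the mat and the cat slept")
print(next_word_probabilities(model, "the"))
print(generate(model, "the", 5))
```

In this toy corpus, "the" is followed by "cat" twice and by "mat" once, so the model assigns them probabilities of two thirds and one third. The defining Markov property is visible in `generate`: the next word is chosen from the current word alone, with no memory of anything earlier.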

2014: Generative Adversarial Networks (GANs) Change the Game

In 2014, Ian Goodfellow and his team introduced Generative Adversarial Networks (GANs). GANs consist of two neural networks:

- Generator: Creates synthetic data.

- Discriminator: Tries to distinguish real data from synthetic data.

These two networks compete, and through this competition, the generator becomes better at creating highly realistic synthetic data, particularly images. GANs revolutionized fields such as image generation, deepfake technology, and computer vision.
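For readers who want the math, this competition is usually written as a minimax objective, following the original GAN paper by Goodfellow and colleagues. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a random noise input $z$, and $p_{\text{data}}$ and $p_z$ denote the data and noise distributions:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this value as large as possible by scoring real data near 1 and generated data near 0, while the generator tries to make it as small as possible by producing samples the discriminator scores as real.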

For the first time, machines could generate highly convincing fake images, art, and even video clips. GANs introduced the world to what it truly meant for AI to “generate” content.

2017: The Transformer Revolution Begins

While GANs were making headlines in image generation, another groundbreaking development emerged in natural language processing: the Transformer architecture.

In 2017, a team of researchers at Google published a paper titled “Attention Is All You Need.” The Transformer model it introduced relies entirely on attention, specifically self-attention, which lets the model relate every position in a text sequence to every other position in parallel, rather than stepping through the text one word at a time. This made training far easier to parallelize than with recurrent models and improved results on language tasks such as machine translation.
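The core operation can be sketched as scaled dot-product attention, written here in plain Python so it runs without extra libraries. This is a simplified illustration under stated assumptions: a real Transformer adds learned projection matrices, multiple attention heads, and positional information, and the function names and toy vectors below are invented for this example.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of all value vectors.

    Each weight comes from the dot product of the query with a key,
    scaled by the square root of the key dimension.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Toy example: one query, two identical keys, so both values get equal weight.
result = scaled_dot_product_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 1.0], [1.0, 1.0]],
    values=[[0.0, 2.0], [4.0, 6.0]],
)
print(result)  # prints: [[2.0, 4.0]]
```

Every query is scored against every key, so no position has to wait for an earlier one to be processed. In practice these loops are replaced by matrix multiplications, which is what makes the approach efficient on modern hardware.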

The Transformer architecture laid the foundation for today’s most powerful Generative AI models. It was the secret sauce that allowed large language models (LLMs) to process and generate human-like text at scale.

The Modern Generative AI Boom: 2018–2025

Following the Transformer breakthrough, the pace of development in Generative AI accelerated dramatically, especially in the domain of natural language processing. Let’s explore the key milestones:

GPT Series (OpenAI)

- 2018: GPT-1

OpenAI introduced Generative Pre-trained Transformer 1 (GPT-1), which used unsupervised learning to predict the next word in a sentence, showing early promise in generating coherent text.

- 2019: GPT-2

GPT-2 surprised researchers with its remarkable ability to generate paragraphs of human-like text. OpenAI initially withheld its full release, fearing misuse due to its potential for generating misinformation.

- 2020: GPT-3

GPT-3 was a game changer. With 175 billion parameters, it exhibited astounding abilities to answer questions, write essays, code, and simulate conversations. GPT-3’s capabilities attracted enormous attention from businesses, researchers, and the public.

- 2022: ChatGPT (powered by GPT-3.5)

OpenAI launched ChatGPT, making AI accessible to millions worldwide. It was widely adopted by freelancers, students, content creators, and businesses for tasks ranging from writing assistance to customer service.

- 2023: GPT-4

OpenAI’s GPT-4 introduced better reasoning, fewer hallucinations, and greater factual accuracy. It expanded the use cases of generative AI in education, content creation, legal research, software development, and more.

- 2024: GPT-4o

GPT-4o represented a major leap into real-time, multimodal AI that could process text, images, audio, and even live conversations. It brought AI closer to real-time assistants capable of complex, multi-modal interactions.

Image Generation Models

While text-based models advanced rapidly, image generation models also saw significant breakthroughs:

- 2021: DALL-E (OpenAI)

DALL-E could generate imaginative images from text prompts, demonstrating creative visual capabilities.

- 2022: Midjourney

Midjourney emerged as a popular tool among digital artists and designers for creating stunning AI-generated visuals.

- 2022: Stable Diffusion (Stability AI)

Stability AI released Stable Diffusion, an image generation model with openly available weights that anyone could download and run on their own hardware, giving widespread access to advanced image generation outside closed, proprietary services.

Other Major Players in Generative AI

Beyond OpenAI, other companies began launching their own powerful Generative AI tools:

- 2023: Gemini (Google)

Google launched its Bard chatbot in 2023 and announced the Gemini model family in December of that year; Bard was renamed Gemini in early 2024. Gemini draws on Google’s vast data resources and integrates deeply with Google Workspace.

- 2024: Claude 3 (Anthropic)

Anthropic introduced Claude 3, focusing on safety, transparency, and ethical AI use while delivering highly capable natural language generation.

Key Milestones Timeline

| Year | Milestone | Developer |
|---|---|---|
| 1950 | Turing Test | Alan Turing |
| 1966 | ELIZA Chatbot | Joseph Weizenbaum |
| 2014 | GANs | Ian Goodfellow |
| 2017 | Transformer | Google Brain |
| 2018 | GPT-1 | OpenAI |
| 2019 | GPT-2 | OpenAI |
| 2020 | GPT-3 | OpenAI |
| 2021 | DALL-E | OpenAI |
| 2022 | Midjourney | Midjourney, Inc. |
| 2022 | Stable Diffusion | Stability AI |
| 2022 | ChatGPT | OpenAI |
| 2023 | GPT-4 | OpenAI |
| 2023 | Gemini | Google |
| 2024 | Claude 3 | Anthropic |
| 2024 | GPT-4o | OpenAI |

The Ongoing Journey of Generative AI

Generative AI has evolved from simple rule-based systems like ELIZA to today’s sophisticated, multimodal, real-time models like GPT-4o. Each breakthrough builds on decades of research across multiple disciplines: computer science, linguistics, statistics, and neuroscience.

For Indian freelancers, students, content creators, and small businesses, Generative AI offers tremendous opportunities:

- Freelancers can automate content creation, customer support, and digital marketing.

- Students can use AI tutors, research assistants, and study tools.

- Content Creators can generate unique text, images, and videos at scale.

- Small Businesses can enhance productivity, automate tasks, and offer better customer experiences.

Understanding the history of Generative AI helps us appreciate both its vast potential and its ethical challenges. As we move forward, issues like bias, misinformation, privacy, and AI safety will become increasingly important.

Generative AI is no longer science fiction. It is here, reshaping industries and empowering individuals. The next decade promises even more innovation as research pushes the boundaries of what machines can create and understand.

Conclusion

The history of Generative AI is a testament to human ingenuity, perseverance, and collaboration. From the humble beginnings of ELIZA to the transformative power of GPT-4o, this journey showcases how far we have come in teaching machines to generate content, simulate conversations, and assist humans across countless tasks. As we stand on the brink of even greater AI capabilities, understanding this rich history offers valuable insight into where we are heading next.
